We failed. I recently attended the Knowledge Gap conference, where we had several discussions related to data modeling. We all agreed that we are in a distressing situation, concerning both the art as a whole and its place in modern architectures, at least when it comes to integrated data models. As an art, we are seeing a decline in both interest and schooling, with practitioners shying away from its complexity and the topic disappearing from curriculums. Modern data stacks are primarily based on shuffling data, and new architectures, like the data mesh, propose a decentralized organization around data, making integration an even harder task.

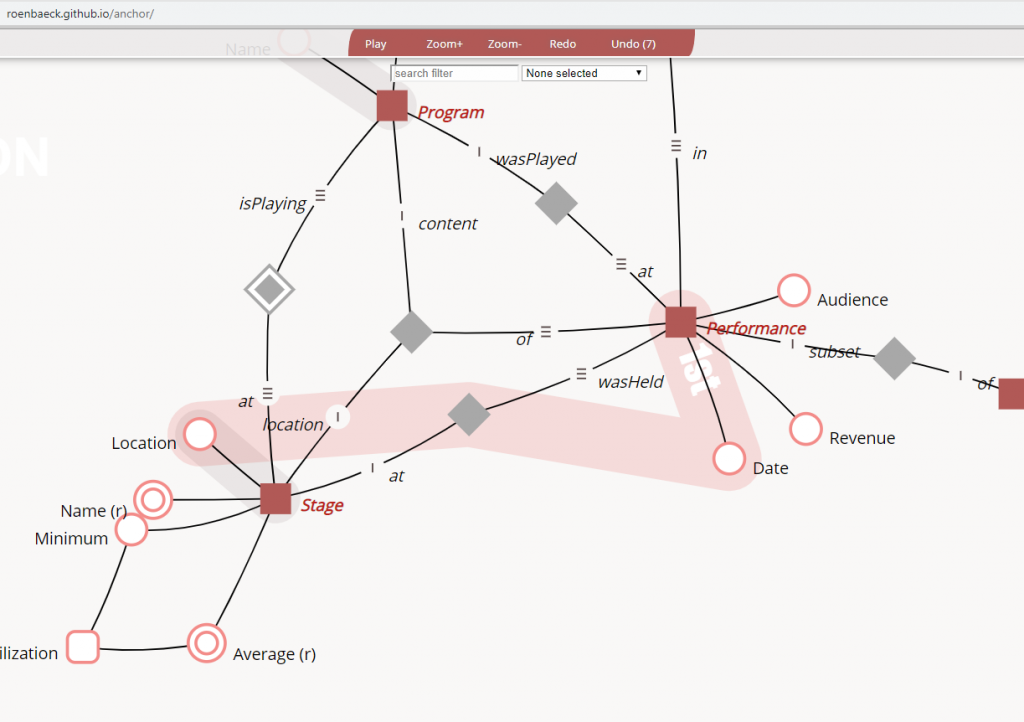

When I say we failed, it is because data modeling in its current form will not take off. Sure, we have successful implementations and modelers with both expertise and experience in Ensemble Modeling techniques, like Anchor modeling, Data Vault and Focal. There are, however, not enough of them, and as long as we are not the buzz, opportunities to actually prove that this works and works well will wane. We tried, but we’re being pushed out. We can push back, and push back harder, but I doubt we can topple the buzzwall. I won’t stop pushing, but maybe it’s also time to peek at the other side of the wall.

If we begin to accept that there will only be a select few who can build and maintain models, but many more who will push data through the stack or provide data products, is there anything we can do to embrace such a scenario?

Data Whisperers

Having given this some thought, I believe I have found one big issue preventing us from managing data in motion as well as we should. Every time we move data around we also make alterations to its representation. It’s like Chinese Whispers (aka the telephone game), in which the message is whispered from each participant to the next and we are lucky if the original message is retained when it reaches the last recipient. A piece of information is, loosely speaking, some bundle of stuff with a possible semantic interpretation. What we are doing in almost all solutions today is to pass on and preserve the semantic interpretation as best we can, while caring less about the bundle it came in. We are all data whisperers, but in this case that’s a bad thing.

Let’s turn this around. What if we could somehow pass a bundle around without having to understand the possible semantic interpretation? In order to do that, the bundle would have to have some form that ensures it remains unaltered by the transfer, and that defers the semantic interpretation. Furthermore, whatever is moving such bundles around cannot be surprised by their form (read: throw an exception), so this calls for a standard. A standard we do not have. There is no widely adopted standard for messaging pieces of information, and herein lies much of the problem.

The Atoms of Data

Imagine it was possible to create atoms of data. Stable, indivisible pieces of information that can remain unchanged through transfer and duplication, and that can be put into a grander context later. The very same piece could live in a source system, or in a data product layer, or in a data pipeline, or in a data warehouse, or all of the above, looking exactly the same everywhere. Imagine there was both a storage medium and a communication protocol for such pieces. Now, let me explain how this solves many of the issues we are facing.

Let’s say you are only interested in shuffling pieces around. With atomic data pieces you are safe from mangling the message on the way. Regardless of how many times you have moved a piece around, it will have retained its original form. What could have happened in your pipelines though, is that you have dressed up your pieces with additional pieces. Adding context on the way.

Let’s say you are building an integrated enterprise-wide model. Now you are taking lots of pieces and want to understand how these fit into an integrated data model. But the model itself is also information, so it should be possible to describe it using some atoms of its own. The model becomes a part of your sea of atoms, floating alongside the pieces it describes. It is no longer a piece of paper printed from some particular modeling tool. It lives and evolves along with the rest of your data.

Let’s say you are building a data product in a data mesh. Your product will shuffle pieces to data consumers, or readers may be a better word, since pieces need not be destroyed at the receiving side. Some of them may be “bare” pieces, that have not yet been dressed up with a model, some may be dressed up with a product-local model and some may have inherited their model from an enterprise-wide model. Regardless of which, if two pieces from different products are identical, they represent the same piece of information, modeled or not.

Model More Later

Now, I have not been entirely truthful in my description of the data atoms. Passing messages around in a standardized way needs some sort of structure, and whatever that structure consists of must be agreed upon. The more universal such an agreement is, the better the interoperability and the smaller the risk of misinterpreting the message. What exactly this is, the things you have to agree upon, is also a model of sorts. In other words, no messaging without at least some kind of model.

We like to model. Perhaps we even like to model a little bit too much. Let us try to forget about what we know about modeling for a little while, and instead try to find the smallest number of things we have to agree upon in order to pass a message. What, similar to a regular atom, are the elementary particles that data atoms consist of? If we can find this set of requirements and it proves to be smaller than what we usually think of when it comes to modeling, then perhaps we can model a little first and model more later.

Model Little First

As it happens, minimal modeling has been my primary interest and topic of research for the last few years. Those interested in a deeper dive can read up on transitional modeling, in which atomic data pieces are explored in detail. In essence, the whole theory rests upon a single structure: the posit.

posit_thing [{(X_thing, role_1), ..., (Y_thing, role_n)}, value, time]

The posit acts as an atomic piece of data, so we will use it to illustrate the concept. It consists of some elements put together, for which it is desired to have a universal agreement, at least within the scope in which your data will be used.

- There is one or more things, like X_thing and Y_thing, and the posit itself is a thing.

- Each thing takes on a role, like role_1 to role_n, indicating how these things appear.

- There is a value, which is what appears for the things taking on these roles.

- There is a time, which is when this value is appearing.

Things, roles, values, and times are the elements of a posit, just as elementary particles build up an atom. Of these, roles need modeling and, less commonly, values or times may also need modeling if they can be of complex types. If we focus on the roles, they will provide a vocabulary, and it is through this vocabulary that posits later gain interpretability and relatability to real events.

p01 [{(Archie, beard color)}, "red", '2001-01-01']

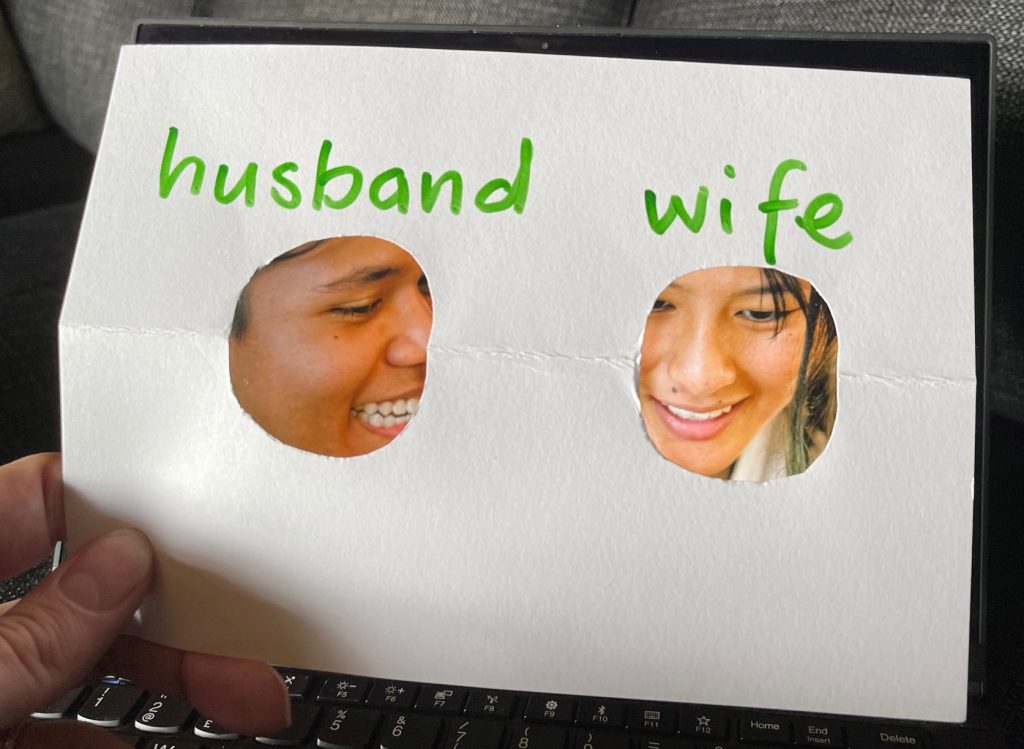

p02 [{(Archie, husband), (Bella, wife)}, "married", '2004-06-19']

The two posits above could be interpreted as:

- When Archie is seen through the beard color role, the value “red” appears since ‘2001-01-01’.

- When Archie is seen through the husband role and Bella through the wife role, the value “married” appears since ‘2004-06-19’.

Noteworthy here is that what we traditionally separate into properties and relationships are both managed by the same structure. Relationships in transitional modeling are also properties, but ones that take several things in order to appear.
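To make the structure concrete, here is a minimal sketch of a posit as an immutable value in Python. The class name `Posit`, the field name `appearances`, and the helpers `posit` and `describe` are assumptions made for illustration only; they are not part of transitional modeling or of any existing library.

```python
from dataclasses import dataclass
from typing import Any, FrozenSet, Tuple


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Posit:
    appearances: FrozenSet[Tuple[Any, str]]  # set of (thing, role) pairs
    value: Any
    time: str  # ISO 8601 date kept as text for simplicity


def posit(appearances, value, time):
    """Hypothetical helper: build a Posit from any iterable of (thing, role) pairs."""
    return Posit(frozenset(appearances), value, time)


def describe(p):
    """Render a posit in the 'seen through the role' phrasing used above."""
    seen = " and ".join(
        f"{thing} is seen through the {role} role"
        for thing, role in sorted(p.appearances)
    )
    return f'When {seen}, the value "{p.value}" appears since {p.time}.'


# A property (one thing) and a relationship (two things) share one structure.
p01 = posit([("Archie", "beard color")], "red", "2001-01-01")
p02 = posit([("Archie", "husband"), ("Bella", "wife")], "married", "2004-06-19")

print(describe(p01))
# When Archie is seen through the beard color role, the value "red" appears since 2001-01-01.
print(describe(p02))
# When Archie is seen through the husband role and Bella is seen through the wife role, the value "married" appears since 2004-06-19.
```

Because the (thing, role) pairs are held in a set, the order in which they are written does not matter, and two posits with identical elements compare as equal.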

Now, the little modeling that has to be done, agreeing upon which roles to use, is surely not an insurmountable task. A vocabulary of roles is also easy to document, communicate and adhere to. Then, with the little modeling out of the way, we’re on to the grander things again.

Decoupling Classification

Most modeling techniques, at least current ones, begin with entities. Having figured out the entities, a model describing them and their connections is made, and only after this model is rigidly put into a database are things added. This is where atomic data turns things upside down. With atomic data, lots of things can be added to a database first, then at some later point in time, these can be dressed up with more context, like an entity-model. The dressing up can also be left to a much smaller number of people if desired (like integration modeling experts).

p03 [{(Archie, thing), (Person, class)}, "classified", '1989-08-20']

After a while I realize that I have a lot of things in the database that may have a beard color and get married, so I decide to classify these as Persons. Sometime later I also need to keep track of Golf Players.

p04 [{(Archie, thing), (Golf Player, class)}, "classified", '2010-07-01']

No problem here. Multiple classifications can co-exist. Maybe Archie at some point also stops playing golf.

p05 [{(Archie, thing), (Golf Player, class)}, "declassified", '2022-06-08']

Again, not a problem. Classification does not have to be static. While a single long-lasting classification is desirable, I believe we have put too much emphasis on static entity-models. Loosening up classification, so that a thing can actually be seen as more than one type of entity and classifications can expire over time, will allow models to be very specific, yield much more flexibility, and extend the longevity of kept data far beyond what we have seen so far. Remember that our atomic pieces are unchanged and remain, regardless of what we do with their classifications.
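As a rough illustration of classification that changes over time, the sketch below derives the classes of a thing on a given date from posits p03 to p05. The `Posit` class and the function `classes_of` are hypothetical names, and treating the latest "classified" or "declassified" value per class as the one in effect is an assumption about how such posits would be read.

```python
from dataclasses import dataclass
from typing import Any, FrozenSet, Tuple


@dataclass(frozen=True)
class Posit:
    appearances: FrozenSet[Tuple[Any, str]]  # (thing, role) pairs
    value: Any
    time: str  # ISO 8601 date as text, so string comparison orders dates


def classes_of(thing, posits, as_of):
    """Return the set of classes the thing belongs to on the date as_of."""
    latest = {}  # class -> most recent classification posit up to as_of
    for p in posits:
        roles = {role: t for t, role in p.appearances}
        if roles.get("thing") != thing or "class" not in roles or p.time > as_of:
            continue
        cls = roles["class"]
        if cls not in latest or p.time > latest[cls].time:
            latest[cls] = p
    return {cls for cls, p in latest.items() if p.value == "classified"}


p03 = Posit(frozenset({("Archie", "thing"), ("Person", "class")}), "classified", "1989-08-20")
p04 = Posit(frozenset({("Archie", "thing"), ("Golf Player", "class")}), "classified", "2010-07-01")
p05 = Posit(frozenset({("Archie", "thing"), ("Golf Player", "class")}), "declassified", "2022-06-08")
posits = [p03, p04, p05]

print(sorted(classes_of("Archie", posits, "2015-01-01")))  # ['Golf Player', 'Person']
print(sorted(classes_of("Archie", posits, "2022-12-31")))  # ['Person']
```

Note that nothing is updated or deleted: the answer changes only because a later posit is taken into account.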

Multitenancy

Two departments in your organization are developing their own data products. Let us also assume that in this example it makes sense for one department to view Archie as a Person and for the other to view Archie as a Golf Player. We will call the Person department “financial” and it additionally needs to keep track of Archie’s account number. We will call the Golf Player department “member” and it additionally needs to keep track of Archie’s golf handicap. First, the posits for the account number and golf handicap are:

p06 [{(Archie, account number)}, 555-12345-42, '2018-01-01']

p07 [{(Archie, golf handicap)}, 36, '2022-05-18']

These posits may live their entire lives in the different data products and never reside together, or they could be copied to temporarily live together for a particular analysis, or they could permanently be stored right next to each other in an integrated database. It does not matter. The original and any copies will remain identical. With those in place, it’s time to add information about the way each department views these.

p08 [{(p03, posit), (Financial Dept, ascertains)}, 100%, '2019-12-31']

p09 [{(p04, posit), (Member Dept, ascertains)}, 100%, '2020-01-01']

p10 [{(p06, posit), (Financial Dept, ascertains)}, 100%, '2019-12-31']

p11 [{(p07, posit), (Member Dept, ascertains)}, 75%, '2020-01-01']

The posits above are called assertions, and they are metadata, since they talk about other posits. Information about information. An assertion records someone’s opinion of a posit and the value that appears is the certainty of that opinion. In the case of 100%, this corresponds to absolute certainty that whatever the posit is stating is true. The Member Department is less certain about the handicap, perhaps because the source of the data is less reliable.

Using assertions, it is possible to keep track of who thinks what in the organization. It also makes it possible to have different models for different parts of the organization. In an enterprise-wide integrated model, perhaps both classifications are asserted by the Enterprise Dept, or some completely different classification is used. You have the freedom to do whatever you want.
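The idea of keeping track of who thinks what can be sketched as follows. Assertions are ordinary posits whose "posit" role is filled by another posit, so the same hypothetical `Posit` class is reused. The function `opinions` is an assumed name, certainties are written as fractions (1.0 for 100%), and for brevity the sketch ignores assertion time, which is fine here because each posit is asserted only once.

```python
from dataclasses import dataclass
from typing import Any, FrozenSet, Tuple


@dataclass(frozen=True)
class Posit:
    appearances: FrozenSet[Tuple[Any, str]]  # (thing, role) pairs
    value: Any
    time: str


def opinions(asserter, posits):
    """Map each posit the asserter has an opinion on to the stated certainty."""
    result = {}
    for a in posits:
        roles = {role: thing for thing, role in a.appearances}
        if roles.get("ascertains") == asserter and "posit" in roles:
            result[roles["posit"]] = a.value
    return result


p03 = Posit(frozenset({("Archie", "thing"), ("Person", "class")}), "classified", "1989-08-20")
p04 = Posit(frozenset({("Archie", "thing"), ("Golf Player", "class")}), "classified", "2010-07-01")
p06 = Posit(frozenset({("Archie", "account number")}), "555-12345-42", "2018-01-01")
p07 = Posit(frozenset({("Archie", "golf handicap")}), 36, "2022-05-18")

# Assertions: posits about posits, with certainty as the value.
p08 = Posit(frozenset({(p03, "posit"), ("Financial Dept", "ascertains")}), 1.0, "2019-12-31")
p09 = Posit(frozenset({(p04, "posit"), ("Member Dept", "ascertains")}), 1.0, "2020-01-01")
p10 = Posit(frozenset({(p06, "posit"), ("Financial Dept", "ascertains")}), 1.0, "2019-12-31")
p11 = Posit(frozenset({(p07, "posit"), ("Member Dept", "ascertains")}), 0.75, "2020-01-01")

everything = [p03, p04, p06, p07, p08, p09, p10, p11]
for target, certainty in opinions("Member Dept", everything).items():
    print(certainty, target.value)
```

Each department's view is just a filter over one shared body of posits, so the views can differ without any posit being altered.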

Immutability

Atomic data only works well if the data atoms remain unchanged. You would not want to end up in a situation where a copy of a posit stored elsewhere than the original all of a sudden looks different from it. Data atoms, the posits, need to be immutable. But, we live in a world where everything is changing, all the time, and we are not infallible, so mistakes can be made.

While managing change and immutability may sound like incompatible requirements, it is possible to have both, thanks to the time in the posit and through assertions. Depending on whether you are facing a new version or a correction, it is handled differently. If the beard of Archie turns gray, this is a new version of his beard color. Recalling the posit about its original color and adding this new information gives us the following posits:

p01 [{(Archie, beard color)}, "red", '2001-01-01']

p12 [{(Archie, beard color)}, "gray", '2012-12-12']

Comparing the two posits, a version (or natural change) occurs when they have the same things and roles, but a different value at a different time. On the other hand, if someone made a mistake entering Archie’s account number, this needs to be corrected once discovered. Let’s recall the posit with the account number and the Financial Dept’s opinion, then add new posits to handle the correction.

p06 [{(Archie, account number)}, 555-12345-42, '2018-01-01']

p10 [{(p06, posit), (Financial Dept, ascertains)}, 100%, '2019-12-31']

p13 [{(p06, posit), (Financial Dept, ascertains)}, 0%, '2022-06-08']

p14 [{(Archie, account number)}, 911-12345-42, '2018-01-01']

p15 [{(p14, posit), (Financial Dept, ascertains)}, 100%, '2022-06-08']

This operation is more complicated, as it needs three new posits. First, the Financial Dept retracts its opinion about the original account number by changing its opinion to 0% certainty, that is, complete uncertainty. For those familiar with bitemporal data, this is sometimes referred to there as a ‘logical delete’. Then a new posit is added with the correct account number, and this new posit is asserted with 100% certainty in the final posit.
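The correction above can be replayed in a small sketch that only ever appends posits. The names `Posit`, `certainty`, and `value_as_of` are hypothetical, certainties are written as fractions, and using a single `as_of` date for both the time in the posit and the time of the assertion is a simplifying assumption; a fuller treatment would keep the two timelines separate.

```python
from dataclasses import dataclass
from typing import Any, FrozenSet, Tuple


@dataclass(frozen=True)
class Posit:
    appearances: FrozenSet[Tuple[Any, str]]  # (thing, role) pairs
    value: Any
    time: str  # ISO 8601 date as text, so string comparison orders dates


def certainty(asserter, target, posits, as_of):
    """Latest certainty the asserter stated about target up to as_of, else None."""
    best = None
    for a in posits:
        roles = {role: thing for thing, role in a.appearances}
        if (roles.get("posit") == target and roles.get("ascertains") == asserter
                and a.time <= as_of and (best is None or a.time >= best.time)):
            best = a
    return None if best is None else best.value


def value_as_of(asserter, appearances, posits, as_of):
    """Value the asserter holds with some certainty for the given pairs at as_of."""
    candidates = [
        p for p in posits
        if p.appearances == frozenset(appearances) and p.time <= as_of
        and (certainty(asserter, p, posits, as_of) or 0) > 0
    ]
    return max(candidates, key=lambda p: p.time).value if candidates else None


account = [("Archie", "account number")]
p06 = Posit(frozenset(account), "555-12345-42", "2018-01-01")
p10 = Posit(frozenset({(p06, "posit"), ("Financial Dept", "ascertains")}), 1.0, "2019-12-31")
p13 = Posit(frozenset({(p06, "posit"), ("Financial Dept", "ascertains")}), 0.0, "2022-06-08")
p14 = Posit(frozenset(account), "911-12345-42", "2018-01-01")
p15 = Posit(frozenset({(p14, "posit"), ("Financial Dept", "ascertains")}), 1.0, "2022-06-08")
posits = [p06, p10, p13, p14, p15]

print(value_as_of("Financial Dept", account, posits, "2020-06-01"))  # 555-12345-42
print(value_as_of("Financial Dept", account, posits, "2022-06-08"))  # 911-12345-42
```

The mistaken posit p06 is still there after the correction, so it remains possible to answer what the Financial Dept believed before the mistake was discovered. Picking the candidate with the latest time is also what would select the newest version when several versions are asserted.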

Immutability takes a little bit of work, but it is necessary. Atoms cannot change their composition without becoming something else. And, as soon as something becomes something else, we are back to whispering data and inconsistencies will abound in the organization.

What’s the catch?

All of this looks absolutely great at first glance. Posits can be created anywhere in the organization provided that everyone is following the same vocabulary for the roles, after which these posits can be copied, sent around, stored, classified, dressed up with additional context, opinionated, and so on. There is, however, one catch.

Identifiers.

In the examples above we have used Archie as an identifier for some particular thing. This identifier needs to have been created somewhere. This somewhere is what owns the process of creating other things like Archie. Unless this is centralized or strictly coordinated, posits about Archie and Archie-likes cannot be created in different places. There should be a universal agreement on what thing Archie represents and no other thing may be Archie than this thing.

More likely, Archie would be stated through some kind of UID, an organizationally unique identifier. Less readable, but more likely the actual case would be:

p01 [{(9799fcf4-a47a-41b5-2d800605e695, beard color)}, "red", '2001-01-01']

The requirement for the identifier of the posit itself, p01, is less demanding. A posit depends on each of its elements, so if just one bit of a posit changes, it is a different posit. The implication of this is that identifiers for posits need not be universally agreed upon, since they can be resolved within a body of information and recreated at will. Some work has to be done when reconciling posits from several sources though. We likely do not want to centralize the process of assigning identities to posits, since that would mean communicating every posit from every system to some central authority, more or less defeating the purpose of decentralization.
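One conceivable way to recreate posit identifiers at will, without a central authority, is to derive them from the posit's own content. This is an assumption made for illustration and not something the text above prescribes. The sketch hashes a canonical JSON rendering with SHA-256; the function name `posit_id` and the choice of serialization are hypothetical.

```python
import hashlib
import json


def posit_id(appearances, value, time):
    """Derive an identifier from the posit's elements (illustrative assumption)."""
    canonical = json.dumps(
        {
            # Sort the (thing, role) pairs so that their order does not matter.
            "appearances": sorted([str(thing), role] for thing, role in appearances),
            "value": value,
            "time": time,
        },
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


a = posit_id([("Archie", "husband"), ("Bella", "wife")], "married", "2004-06-19")
b = posit_id([("Bella", "wife"), ("Archie", "husband")], "married", "2004-06-19")
c = posit_id([("Archie", "husband"), ("Bella", "wife")], "divorced", "2004-06-19")

print(a == b)  # True: same elements, same identifier, wherever it is computed
print(a == c)  # False: one changed element makes it a different posit
```

With such a scheme two systems holding the same posit would arrive at the same identifier independently, while the identifiers of things like Archie would still need the coordination described above.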

Conclusions

If we are to pull off something like the data mesh, there are several key features we need to have:

- Atomic data that can be passed around, copied, and stored without alteration.

- As few things as possible that need to be universally agreed upon.

- Model little first – model more later, dress up data differently by locality or time.

- Immutability so that data remains consistent across the organization.

- Versions and corrections, while still adhering to immutability.

- Centralized management for the assignment of identifiers.

As we have seen, getting all of these requires carefully designed data structures, like the posit, and a sound theory of how to apply them. With the work I have done, I believe we have both. What is still missing are the two things I asked you to imagine earlier, a storage medium and a communication protocol. I am well on the way to producing a storage medium in the form of the bareclad database engine, and a communication protocol should not be that difficult, given that we already have a syntax for expressing posits, as in the examples above.

If you, like me, think this is the way forward, please consider helping out in any way you can. The goal is to keep everything open and free, so if you get involved, expect it to be for the greater good. Get in touch!

We may have failed. But all is definitely not lost.