This article was written in its entirety by Bing Chat. I wanted to entertain the idea of a bot endorsing the JBOT (just a bunch of tables) style data lake. Its technology might be used to circumvent many of the numerous issues that follow from such a style of data management.

Lars Rönnbäck

Data lakes are centralized repositories that store large amounts of data in their native, raw format. They can accommodate data from various sources and formats, such as relational, non-relational, structured, semi-structured, or unstructured. Data lakes enable different types of analytics, such as dashboards, visualizations, big data processing, real-time analytics, and machine learning.

However, data lakes also pose some challenges for data management and analysis. For example, how can users find and access the relevant data in the data lake? How can they ensure the quality and consistency of the data? How can they integrate and query the data efficiently and effectively?

One possible solution is to use a large language model (LLM) to interact with the data lake. An LLM is a form of natural language processing that can perform various tasks, such as generating text, classifying text, answering questions, responding to email or social media posts, and translating from one language to another. An LLM can also generate code, including scripting and automation for operating infrastructure.

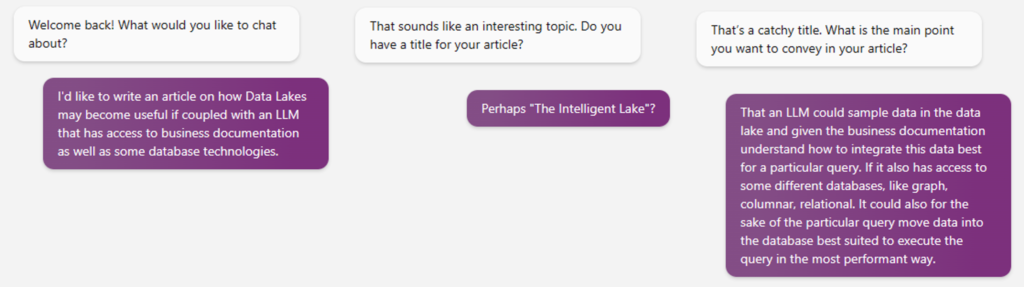

In this article, we will explore how an LLM can help with data management and analysis in a data lake. We will explain how an LLM can sample data in the data lake and use business documentation to understand how best to integrate this data for a particular query. We will also show how an LLM can access different types of databases, such as graph, columnar, and relational, and move data into the one best suited to execute the query with the best performance. We will provide some examples or use cases to illustrate our point. Finally, we will conclude with a summary of our main argument and some implications or recommendations for future research or practice.

Sampling data in the data lake

One of the challenges of data management and analysis in a data lake is finding and accessing the relevant data for a particular query. Data lakes store large amounts of data in their native, raw format, which can vary in structure, schema, quality, and consistency. Moreover, data lakes often lack metadata or documentation that can help users understand and locate the data they need.

To address this challenge, an LLM can use natural language queries to access and sample data from the data lake. An LLM can understand the user’s intent and context from the natural language query, and translate it into code or a script that can extract and process the data from the data lake. For example, an LLM can translate a user query such as “show me the sales revenue by product category for the last quarter” into a SQL query that can run on the data lake.
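As a rough illustration, the sketch below shows the kind of SQL an LLM might produce for the example query, run against an in-memory SQLite database. The `sales` table, its columns, the sample rows, and the fixed quarter boundaries are all assumptions invented here; a real data lake would expose different names and most likely a different query engine.

```python
import sqlite3

# Hypothetical schema and rows, invented for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_date TEXT, product_category TEXT, revenue REAL)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("2023-01-15", "Bread", 120.0),
        ("2023-02-03", "Pastry", 80.0),
        ("2023-03-20", "Bread", 60.0),
        ("2023-04-02", "Pastry", 999.0),  # outside the quarter, must be ignored
    ],
)

# The kind of SQL an LLM might generate for
# "show me the sales revenue by product category for the last quarter".
# "Last quarter" is pinned to Q1 2023 so the example is reproducible.
GENERATED_SQL = """
    SELECT product_category, SUM(revenue) AS total_revenue
    FROM sales
    WHERE sale_date >= ? AND sale_date < ?
    GROUP BY product_category
    ORDER BY product_category
"""


def revenue_by_category(connection, quarter_start, quarter_end):
    """Run the query for the half-open date range [quarter_start, quarter_end)."""
    return connection.execute(GENERATED_SQL, (quarter_start, quarter_end)).fetchall()


result = revenue_by_category(conn, "2023-01-01", "2023-04-01")
print(result)
```

Passing the quarter boundaries as parameters, rather than letting the model write them into the SQL text, keeps the generated statement reusable and makes it easier to review what "last quarter" was taken to mean.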

An LLM can also handle different data formats and schemas in the data lake, such as relational, non-relational, structured, semi-structured, or unstructured. An LLM can use natural language understanding to read and interpret the data sources, and use natural language generation to create code or scripts that can transform and normalize the data into a common format that can be queried.
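The transformation code described above could look something like the following sketch, which maps a CSV source and a nested JSON source onto one common record shape. The two sources, their field names, and the target shape (`customer_id`, `amount`) are hypothetical; the point is only to show what "normalizing into a common format" might mean in practice.

```python
import csv
import io
import json

# Two hypothetical raw sources holding the same kind of facts in different shapes.
CSV_SOURCE = "customer_id,amount\n1,10.50\n2,7.25\n"
JSON_SOURCE = '[{"customer": {"id": 3}, "total": "4.00"}]'


def from_csv(text):
    """Map CSV rows to the common shape {"customer_id": int, "amount": float}."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"customer_id": int(row["customer_id"]), "amount": float(row["amount"])}
        for row in reader
    ]


def from_json(text):
    """Map nested JSON objects to the same common shape."""
    return [
        {"customer_id": int(item["customer"]["id"]), "amount": float(item["total"])}
        for item in json.loads(text)
    ]


# Once both sources share a shape, they can be queried together.
records = from_csv(CSV_SOURCE) + from_json(JSON_SOURCE)
print(records)
```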

Additionally, an LLM can apply data quality and consistency checks on the data sampled from the data lake. An LLM can use natural language understanding to read and interpret the metadata or documentation associated with the data sources, and use natural language generation to create code or scripts that can validate and clean the data. For example, an LLM can use natural language processing to detect and correct missing values, outliers, duplicates, errors, or inconsistencies in the data.
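A generated validation script might resemble the minimal sketch below, which flags missing values, duplicate keys, and crude outliers in sampled rows. The `orders` sample, the field names, and the outlier rule (more than five times the median) are assumptions chosen for illustration, not a recommended standard.

```python
from statistics import median


def quality_report(rows, key_field, value_field, outlier_factor=5.0):
    """Return row indexes with missing values, repeated keys, or extreme values."""
    missing, duplicates, seen = [], [], set()
    for index, row in enumerate(rows):
        if row.get(value_field) is None:
            missing.append(index)
        key = row.get(key_field)
        if key in seen:
            duplicates.append(index)  # second and later occurrences of a key
        seen.add(key)

    values = [row[value_field] for row in rows if row.get(value_field) is not None]
    typical = median(values) if values else None
    # Crude rule of thumb: anything far above the median is flagged for review.
    outliers = [
        index
        for index, row in enumerate(rows)
        if typical
        and row.get(value_field) is not None
        and row[value_field] > outlier_factor * typical
    ]
    return {"missing": missing, "duplicates": duplicates, "outliers": outliers}


# Hypothetical sample drawn from the data lake.
orders = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 12.0},
    {"order_id": 3, "amount": 11.0},
    {"order_id": 4, "amount": 500.0},
]
report = quality_report(orders, "order_id", "amount")
print(report)
```

The sketch only detects problems. Whether to correct, drop, or keep a flagged row is a business decision that the script leaves open.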

Connecting to internal business documentation

Another challenge of data management and analysis in a data lake is understanding and contextualizing the data in relation to the business objectives and requirements. Data lakes often lack metadata or documentation that can help users understand the meaning, purpose, and quality of the data. Moreover, data lakes often store data from multiple heterogeneous sources, which can have different definitions, standards, and policies.

To address this challenge, an LLM can use natural language understanding to read and interpret internal business documentation, such as policies, rules, standards, and requirements. An LLM can use natural language processing to extract relevant information from the business documentation, such as business goals, objectives, metrics, indicators, constraints, and preferences.

An LLM can also use this information to enrich and contextualize the data from the data lake. An LLM can use natural language generation to create metadata or documentation that can describe the data sources, attributes, values, and quality. An LLM can also use natural language understanding to align the data with the business goals and objectives, and use natural language generation to create code or scripts that can transform and normalize the data accordingly.
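The structural part of such metadata can be derived directly from sampled rows, as in this minimal sketch. The sample data and the chosen properties (observed types and fraction of nulls per column) are assumptions; descriptions of business meaning would still have to come from the documentation.

```python
def describe_columns(rows):
    """Build a simple metadata record per column from sampled rows."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            info = columns.setdefault(name, {"types": set(), "nulls": 0, "count": 0})
            info["count"] += 1
            if value is None:
                info["nulls"] += 1
            else:
                info["types"].add(type(value).__name__)
    return {
        name: {
            "types": sorted(info["types"]),
            "null_fraction": info["nulls"] / info["count"],
        }
        for name, info in columns.items()
    }


# Hypothetical sample drawn from one source in the data lake.
sample = [
    {"customer_id": 1, "city": "Stockholm"},
    {"customer_id": 2, "city": None},
]
metadata = describe_columns(sample)
print(metadata)
```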

One of the advantages of using an LLM for data management and analysis in a data lake is that you can customize and fine-tune it to your specific domain and needs. You can add your own content to an existing LLM to improve its performance and accuracy on your data lake queries. There are different ways to add your own content to an existing LLM, depending on the tool or platform you are using. For example, you can use xTuring, an open-source tool that allows you to create your own LLM with only three lines of code. You can also use Mythical AI, a platform that allows you to train ChatGPT on your own text and data. You can also use Microsoft 365 Copilot, a service that connects LLMs to your business data in a secure and compliant way. Adding your own content to an existing LLM can help you achieve better results and insights from your data lake.

Integrating data

Another challenge of data management and analysis in a data lake is integrating and transforming the data from various sources and formats into a common format that can be queried. Data lakes often store data in their native, raw format, which can vary in structure, schema, quality, and consistency. Moreover, data lakes often lack metadata or documentation that can help users understand and locate the data they need.

To address this challenge, an LLM can use natural language generation to create code or scripts that can integrate and transform the data from the data lake. An LLM can understand the user’s intent and context from the natural language query, and translate it into code or a script that can extract, process, and load the data from the data lake. For example, an LLM can translate a user query such as “show me the sales revenue by product category for the last quarter” into code or a script that integrates and transforms the data from different sources and formats into a common format that can be queried.

An LLM can also optimize the data integration process by using techniques such as parallelization, caching, partitioning, and compression. An LLM can use natural language understanding to read and interpret the metadata or documentation associated with the data sources, and use natural language generation to create code or scripts that can apply these techniques to improve the performance and efficiency of the data integration process. For example, an LLM can use natural language processing to detect and apply parallelization techniques to speed up the data integration process by dividing the data into smaller chunks and processing them simultaneously.
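The chunk-and-process-simultaneously idea can be sketched with Python's standard `concurrent.futures` module. The transformation (cents to whole currency units), the chunk size, and the worker count are placeholders chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def transform_chunk(chunk):
    """Placeholder transformation: convert amounts in cents to currency units."""
    return [cents / 100 for cents in chunk]


def parallel_transform(items, chunk_size=3, workers=4):
    """Transform chunks concurrently and stitch the results back in order."""
    chunks = chunked(items, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the order the chunks were submitted.
        results = list(pool.map(transform_chunk, chunks))
    return [value for chunk in results for value in chunk]


amounts_in_cents = [150, 275, 1000, 50, 325, 25, 700]
converted = parallel_transform(amounts_in_cents)
print(converted)
```

Threads mainly help when each chunk waits on I/O, such as reading files from object storage. For CPU-bound transformations in CPython, a process pool or a distributed engine is usually the better fit.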

Executing queries

Another challenge of data management and analysis in a data lake is executing queries efficiently and effectively on the data. Data lakes often store large amounts of data in their native, raw format, which can vary in structure, schema, quality, and consistency. Moreover, data lakes often require different types of analytics, such as dashboards, visualizations, big data processing, real-time analytics, and machine learning.

To address this challenge, an LLM can use natural language processing to translate user queries into SQL or other query languages. An LLM can use natural language understanding to read and interpret the user’s intent and context from the natural language query, and use natural language generation to create code or scripts that can run on the data lake. For example, an LLM can use natural language processing to translate a user query such as “show me the sales revenue by product category for the last quarter” into a SQL query that can run on the data lake.

An LLM can also access different types of databases, such as graph, columnar, and relational, and move data into the one best suited to execute the query with the best performance. An LLM can use natural language understanding to read and interpret the metadata or documentation associated with the data sources, and use natural language generation to create code or scripts that can extract, process, and load the data from the data lake into the appropriate database. For example, an LLM can use natural language processing to detect and apply query optimization techniques such as indexing, caching, partitioning, and compression.
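The decision of where to run a query could, in its simplest form, be reduced to a few rules like the ones sketched below. The `QueryProfile` fields and the mapping itself are simplifying assumptions made for this example; how an LLM would actually weigh these factors is not specified here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryProfile:
    """Hypothetical summary of what a query needs, as an LLM might infer it."""
    traverses_relationships: bool  # e.g. "friends of friends who bought X"
    aggregates_many_rows: bool     # e.g. "total revenue by category"


def choose_store(profile):
    """Pick a database type using deliberately simplistic rules."""
    if profile.traverses_relationships:
        return "graph"
    if profile.aggregates_many_rows:
        return "columnar"
    return "relational"


revenue_query = QueryProfile(traverses_relationships=False, aggregates_many_rows=True)
print(choose_store(revenue_query))
```

The cost of moving the data into the chosen store is ignored in this sketch, although in practice it could outweigh the gain from a faster query.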

Additionally, an LLM can provide feedback and suggestions to improve the query performance and accuracy. An LLM can use natural language processing to analyze the query results and compare them with the user’s expectations and goals. An LLM can also use natural language generation to create messages or reports that can explain the query results, highlight any issues or anomalies, and provide recommendations for further actions or refinements.

Conclusions

In this article, we have explored how an LLM can help with data management and analysis in a data lake. We have explained how an LLM can sample data in the data lake and use business documentation to understand how best to integrate this data for a particular query. We have also shown how an LLM can access different types of databases, such as graph, columnar, and relational, and move data into the one best suited to execute the query with the best performance. We have provided some examples or use cases to illustrate our point.

We have argued that using an LLM for data management and analysis in a data lake can provide several benefits, such as:

- Reducing the time and effort required to find and access the relevant data in the data lake

- Improving the quality and consistency of the data in the data lake

- Optimizing the data integration and query performance

- Enhancing the user experience and satisfaction

However, we have also acknowledged some of the limitations and challenges of using an LLM for data management and analysis in a data lake, such as:

- Ensuring the security and privacy of the data in the data lake

- Maintaining the accuracy and reliability of the LLM outputs

- Evaluating the trade-offs between speed and quality of the LLM results

- Scaling up the LLM capabilities to handle large and complex data sets

We have suggested some implications or recommendations for future research or practice, such as:

- Developing and testing new techniques and tools to improve the LLM performance and accuracy

- Exploring and comparing different LLM architectures and frameworks

- Applying and adapting the LLM approach to different domains and scenarios

- Measuring and reporting the business value and impact of using an LLM for data management and analysis in a data lake

I see two current obstacles that may take longer to overcome:

1. Reliable and consistent results. Having played around with Bing Chat and given it the task of producing an Anchor model for a small bakery business, I found that it comes up with a different model each time it is asked. There is a risk here that “complicated” questions, such as “How many customers have we got right now?”, will result in different and incomparable answers over time.

2. Innovative approaches. I do not see Bing Chat coming up with a new type of modeling technique that better solves any underlying issues and that can serve a business better than any of the existing ones. There are surely undiscovered techniques that can do that, but for the moment those discoveries are reserved for humans.

And so we are already getting much closer: https://youtu.be/X_c7gLfJz_Q